KnoBAB

A fast LTLf Log-SAT Solver and miner with Data Payload

KnoBAB provides an ecosystem for temporal data analysis, supporting both multivariate time series analysis and log data. Both of these data models are represented into an internal columnar database enabling fast data querying and mining, while outperforming state of the art algorithms.

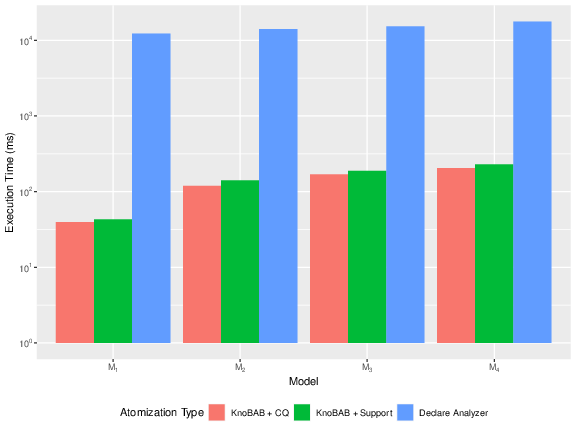

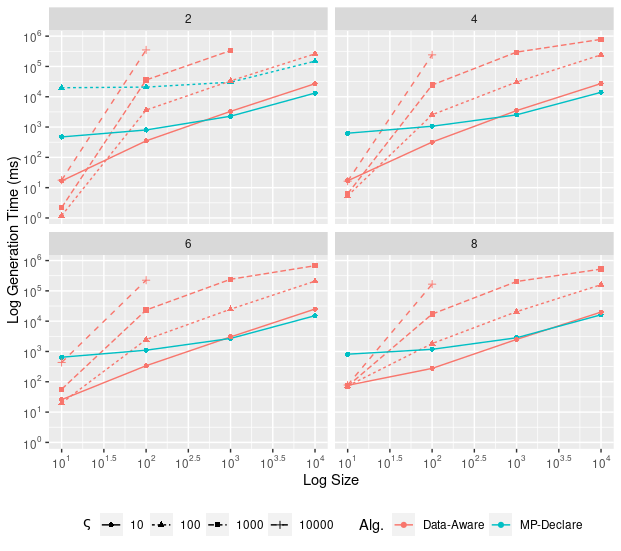

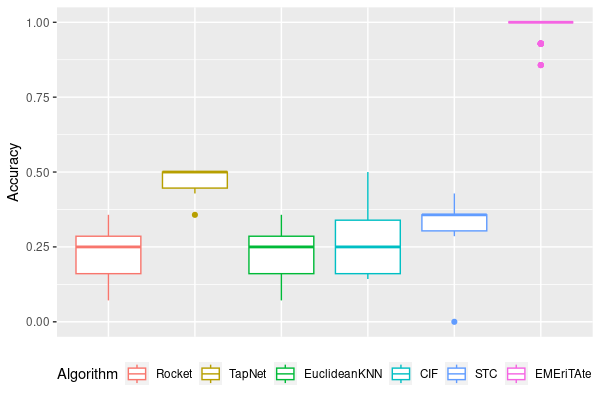

Bechmarks showing that our solutions outperform state of the art data-aware formal verification (left: (Bergami et al., 2023)), formal synthesis (center: (Bergami, 2023)), and multivariate time series classification (right: (Bergami et al., 2024)) solutions.

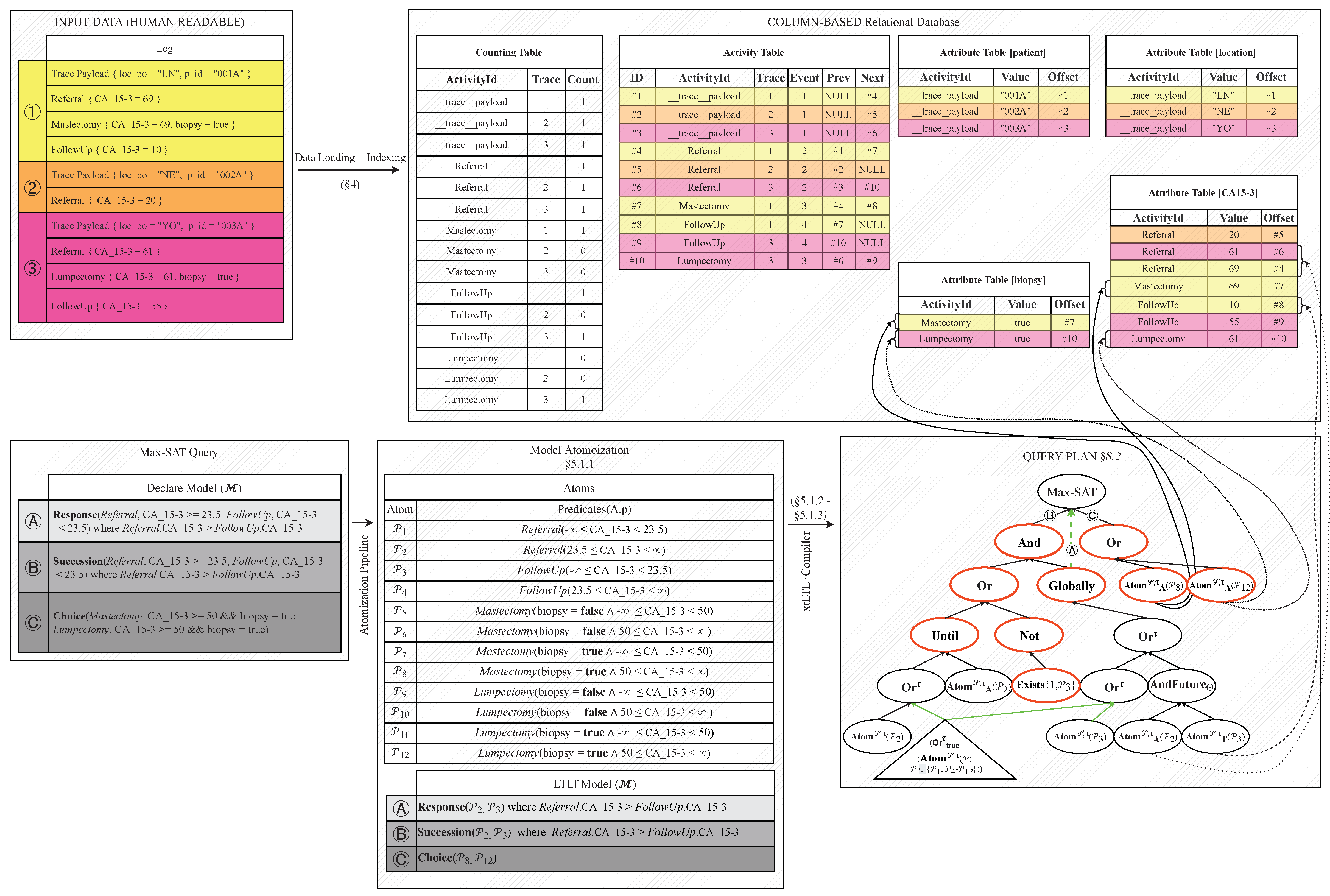

Temporal data is loaded into a columnar database (above), while declarative queries are rewritten into algebraic temporal operators (below) (Bergami et al., 2023).

While specification mining, formal verification, and formal synthesis tasks need the prompt interaction with C++ code, we are working towards fully-supporting the Multivariate Time Series classification task in Python, for which we now provide a separate Python pipeline via EMeriTAte.

Invited Talks and Presentations

- Giacomo Bergami. “Apprentissage Relationnel: La Logique et les Algorithmes remettent en cause les techniques d’apprentissage profond”. LIFO – Universitè d’Orléans, 24ᵗʰ of April, 2024.

- Giacomo Bergami. “KnoBAB: Making Logic Fast” LTf@AAAI-SSS, 29ᵗʰ of March, 2023

- Giacomo Bergami. “KnoBAB: Fast Business Process Management with Temporal Reasoning” North East Data Scientist MeetUp, 16ᵗʰ of March, 2023